Kubernetes is the hotness in the cloud/virtualization space right now. The last time I was really able to focus on virtualization was when OpenStack was the hotness (which doesn’t feel that long ago). Though I have had a general idea of what Kubernetes is and what it does, I haven’t felt comfortable with its various layers of abstraction across hardware and software, so I felt it was time to refresh my own understanding of virtualization.

This post is my own exploration of Kubernetes to educate myself on what it is, why it matters, and how it works. I wrote this article because writing is part of my own learning process and helps me digest complex concepts quickly.

Kubernetes is a tool that deploys your apps on container infrastructure

Kubernetes is an open-source automation system for containers, originally built at Google using the best practices it had developed running containers at scale. The benefit of using Kubernetes is that you can deploy, scale, and manage applications on top of containers without having to manage each container individually, no matter how many you have. It is a layer of abstraction on top of a container runtime, like Docker.

Okay, but what’s a container?

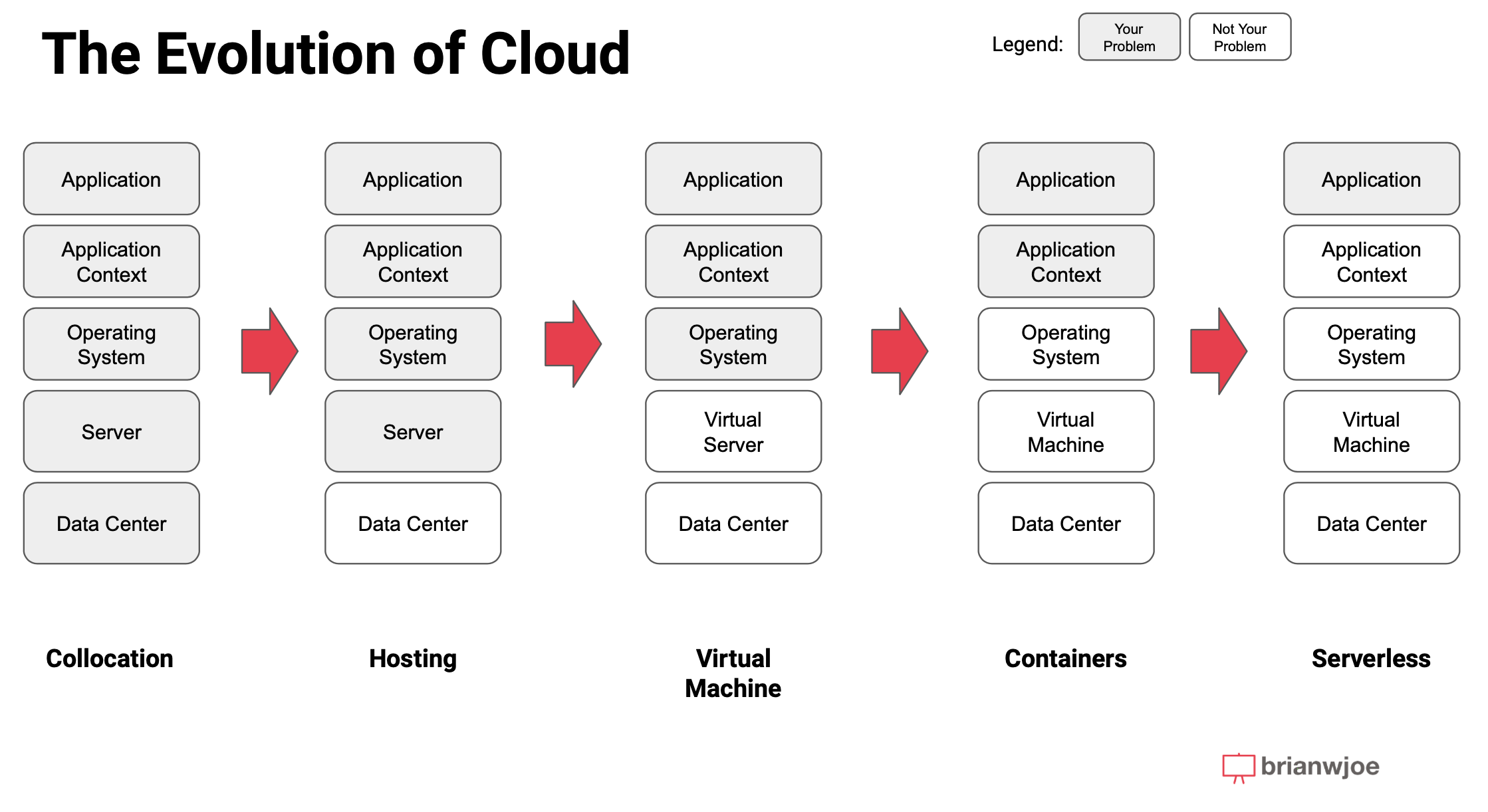

A container is the next evolution of virtualization past the virtual machine. Instead of virtualizing the hardware, it virtualizes the operating system: multiple isolated environments run at the same time on a single machine, all sharing that machine’s operating system kernel. This is similar to how virtual machines let you run more than one virtual server on a single physical server.

The most commonly used tool for running containers is Docker Engine, a program that runs on a physical (or virtual) machine and lets you run multiple isolated environments on top of it. They share the resources of that single machine, but are isolated so that each one behaves as if it were running by itself. (This is similar to the Xen hypervisor for VMs, which lets you run multiple virtual machines on top of a single physical server.) Docker Engine is bundled with other tools into a platform that is referred to as Docker.

Here’s another diagram that illustrates the differences between virtual machines (hypervisor) and containers (Docker host):

But if I have Docker, why do I need Kubernetes?

Docker is sufficient if you are running on a single physical machine, like your laptop. You can use Docker to create and tear down lots of containers and do things with them, like setting up development environments.

What if you want to run really expensive, heavy apps that require more resources than a single machine can handle? Then you would want to run Docker on multiple machines, but to coordinate those machines centrally and deploy apps across them, you need a tool like Kubernetes.

In short, Kubernetes lets you define your apps and the resources they require, then schedules (maps) them onto the various machines you have running Docker. Its primary role is as a broker / matchmaker.
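To make the matchmaker idea concrete for myself, here’s a toy sketch in Python. It is a simple first-fit placement that assumes each app declares only CPU and memory. The real Kubernetes scheduler is more sophisticated (it filters out nodes that can’t run a pod, then scores the rest), and the `Machine`, `App`, and `schedule` names are hypothetical, not Kubernetes APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    """Hypothetical machine running Docker, tracking its spare capacity."""
    name: str
    free_cpu: float      # CPU cores still available
    free_mem_gb: float   # memory still available, in GB
    apps: list = field(default_factory=list)


@dataclass
class App:
    """Hypothetical app that declares how much CPU and memory it needs."""
    name: str
    cpu: float
    mem_gb: float


def schedule(apps, machines):
    """Place each app on the first machine with enough spare CPU and memory.

    Returns a dict of app name -> machine name (None if nothing fits).
    """
    placement = {}
    for app in apps:
        placement[app.name] = None
        for m in machines:
            if m.free_cpu >= app.cpu and m.free_mem_gb >= app.mem_gb:
                # Reserve the resources so later apps see less spare capacity.
                m.free_cpu -= app.cpu
                m.free_mem_gb -= app.mem_gb
                m.apps.append(app.name)
                placement[app.name] = m.name
                break
    return placement


machines = [Machine("laptop", 2, 4), Machine("server", 8, 32)]
apps = [App("web", 1, 2), App("db", 4, 16), App("cache", 1, 1), App("ml", 16, 64)]
placement = schedule(apps, machines)
print(placement)  # {'web': 'laptop', 'db': 'server', 'cache': 'laptop', 'ml': None}
```

Note that `ml` gets no machine at all: no single machine has 16 spare cores, which is exactly the situation where you’d add more machines to the pool.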

How does Kubernetes work?

The following is a summary of this article, which helped me understand Kubernetes: https://medium.com/google-cloud/kubernetes-101-pods-nodes-containers-and-clusters-c1509e409e16. Thank you, Google Cloud Developer Blog!

How does Kubernetes virtualize hardware?

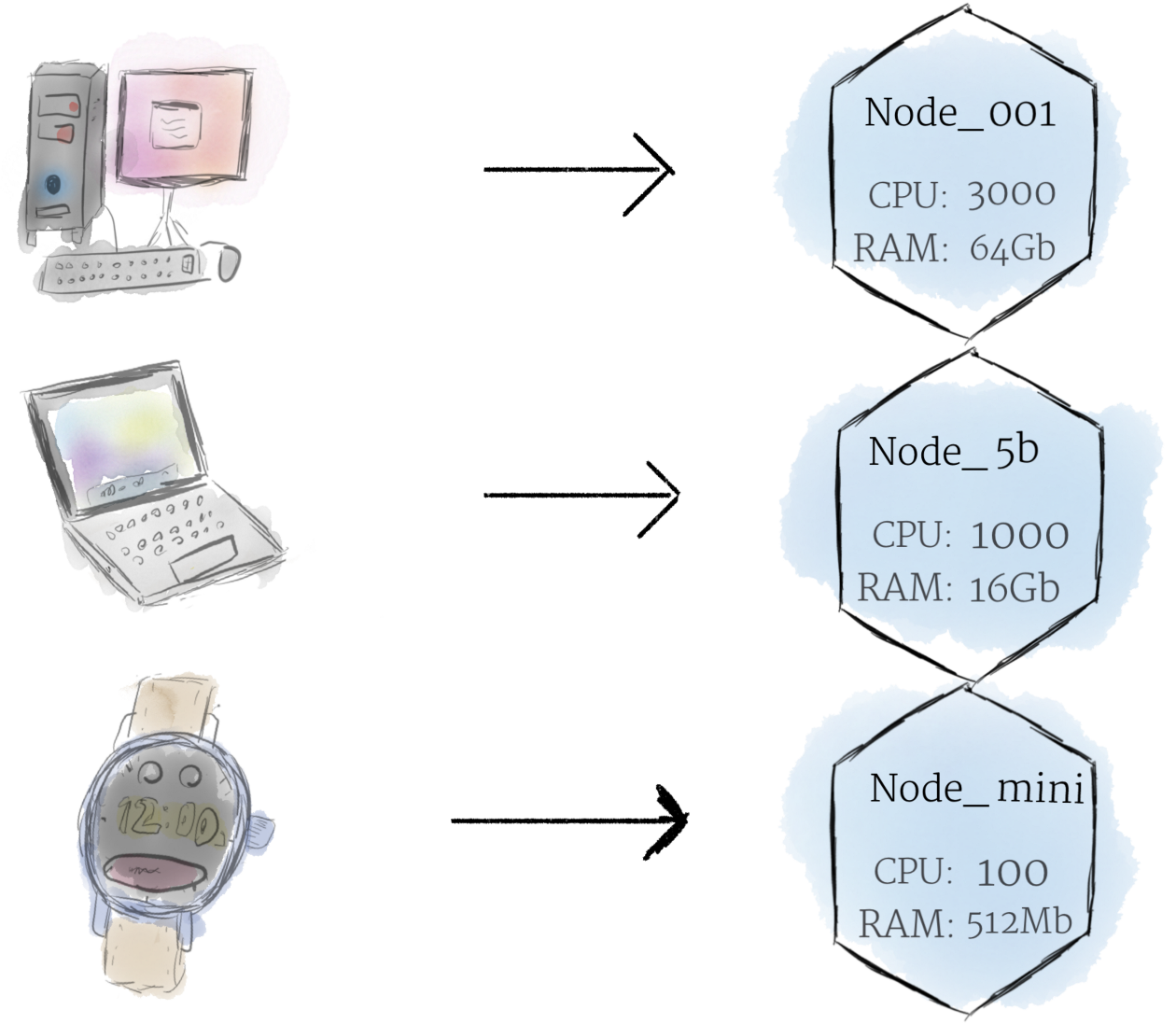

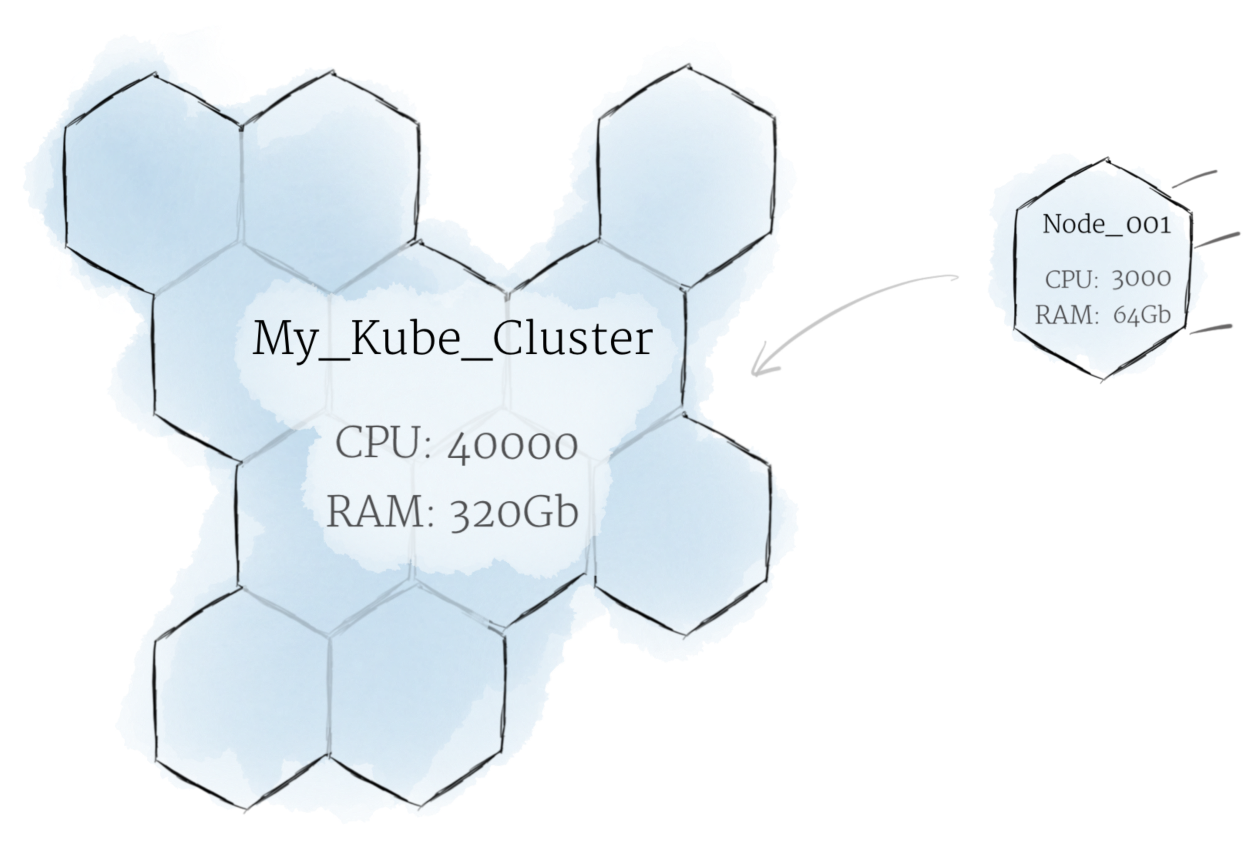

Nodes – A node is an abstraction of CPU and memory: a single machine running Kubernetes. It could be almost anything, from a physical server in a data center to a virtual machine hosted by a cloud provider.

Cluster – A collection of nodes. Put more than one node together and you have a cluster, which Kubernetes treats as a single pool of resources.
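Here’s a minimal sketch of the node/cluster relationship, assuming each node is described only by its CPU cores and memory. The `Node` and `Cluster` classes are hypothetical illustrations, not Kubernetes objects; the point is just that a cluster is a pool whose capacity is the sum of its nodes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical node: one machine (physical or virtual) contributing CPU and memory."""
    name: str
    cpu_cores: int
    memory_gb: int
    kind: str = "vm"  # e.g. "physical" or "vm"; informational only


@dataclass
class Cluster:
    """Hypothetical cluster: a collection of nodes viewed as one pool of resources."""
    nodes: list

    def total_cpu(self) -> int:
        return sum(n.cpu_cores for n in self.nodes)

    def total_memory_gb(self) -> int:
        return sum(n.memory_gb for n in self.nodes)

    def add_node(self, node: Node) -> None:
        # Growing the cluster is just adding another node to the pool.
        self.nodes.append(node)


cluster = Cluster([Node("rack-1", 16, 64, "physical"), Node("cloud-vm-1", 4, 16)])
cluster.add_node(Node("cloud-vm-2", 4, 16))
print(cluster.total_cpu(), cluster.total_memory_gb())  # 24 96
```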

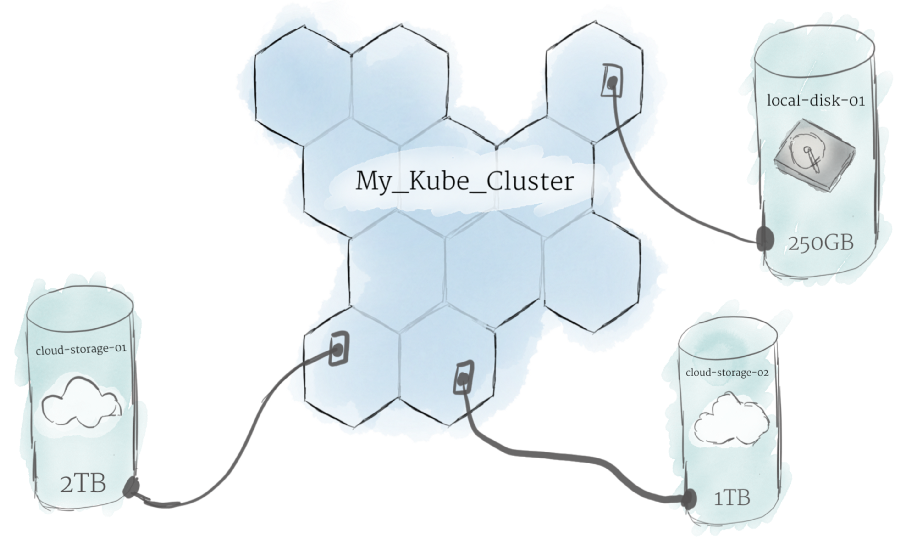

Persistent Volumes – Persistent volumes are the Kubernetes abstraction for storage. In Kubernetes, a node’s local storage should be treated as a temporary cache, and persistent volumes are used for longer-term data storage. This is due to the ephemeral nature of apps and workloads: if an app starts on one node but moves to another, it might no longer have access to the data it stored locally.
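A toy model of why local storage can’t be trusted once an app moves. Everything here (`NodeLocalDisk`, `PersistentVolume`, `Pod`) is a hypothetical stand-in; in real Kubernetes, a pod requests storage through a PersistentVolumeClaim rather than holding a volume object directly.

```python
class NodeLocalDisk:
    """Scratch space that lives and dies with a single node."""

    def __init__(self):
        self.files = {}


class PersistentVolume:
    """Storage that exists independently of any node (e.g. a network-attached disk)."""

    def __init__(self):
        self.files = {}


class Pod:
    def __init__(self, name, node_disk, volume):
        self.name = name
        self.local = node_disk  # cache on whichever node the pod lands on
        self.volume = volume    # the same volume follows the pod between nodes

    def write(self, key, value):
        self.local.files[key] = value   # fast, but tied to this node
        self.volume.files[key] = value  # durable copy

    def read_local(self, key):
        return self.local.files.get(key)

    def read_persistent(self, key):
        return self.volume.files.get(key)


pv = PersistentVolume()
pod = Pod("app", NodeLocalDisk(), pv)
pod.write("order-42", "paid")

# The app is rescheduled: a new pod starts on a different node with a fresh
# local disk, but it is attached to the same persistent volume.
moved = Pod("app", NodeLocalDisk(), pv)
print(moved.read_local("order-42"))       # None
print(moved.read_persistent("order-42"))  # paid
```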

How does Kubernetes virtualize software?

Kubernetes has a few different software abstractions:

Containers – I talked about these earlier in this post! A container is a package of an application and its dependencies, running in its own isolated environment on a shared operating system.

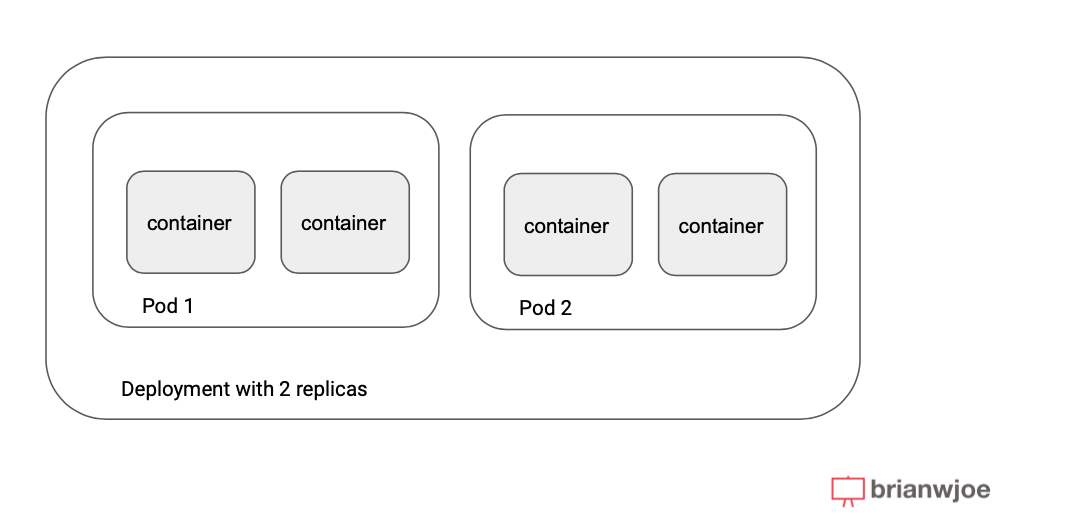

Pods – Kubernetes doesn’t manage raw containers. Instead, it wraps them in a higher-level abstraction called a Pod. A pod is a group of one or more containers, and all the containers within a pod share the same resources (like local storage and network). Communication between containers within a pod is very easy, much as if they were running on the same machine. Pods are the unit of scale in Kubernetes: when a scaling event is triggered, additional pods are spun up (not individual containers).
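A small sketch of the pod idea, under a few simplifying assumptions: pod IPs come from a made-up `10.0.0.x` range, and the `Container`, `Pod`, and `scale` names are hypothetical. It shows two points from the paragraph above: containers in a pod share one IP and reach each other via localhost, and scaling stamps out whole pods rather than individual containers.

```python
import itertools
from dataclasses import dataclass, field

# Hypothetical address source: each new pod gets the next 10.0.0.x address.
_next_ip = itertools.count(1)


@dataclass
class Container:
    name: str
    port: int


@dataclass
class Pod:
    containers: list
    # One IP per pod, shared by every container inside it.
    ip: str = field(default_factory=lambda: f"10.0.0.{next(_next_ip)}")
    shared_files: dict = field(default_factory=dict)  # storage visible to all containers

    def local_url(self, container_name):
        """How a container reaches a sibling in the same pod: via localhost."""
        for c in self.containers:
            if c.name == container_name:
                return f"http://localhost:{c.port}"
        raise KeyError(container_name)


def scale(template, replicas):
    """Scaling creates whole pods (every container in the template), never lone containers."""
    return [Pod([Container(c.name, c.port) for c in template]) for _ in range(replicas)]


template = [Container("web", 8080), Container("log-shipper", 9000)]
pods = scale(template, 3)
print([p.ip for p in pods])              # ['10.0.0.1', '10.0.0.2', '10.0.0.3']
print(pods[0].local_url("log-shipper"))  # http://localhost:9000
```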

Deployments – A deployment manages a group of identical Pods. Its purpose is to declare how many replicas of a pod should be running at the same time; Kubernetes then works to keep that many running, replacing any that fail. For example, a common pattern is to run 3 replicas for resiliency.
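Kubernetes controllers work by repeatedly comparing the desired state with what’s actually running and correcting the difference. Here’s a heavily simplified sketch of that loop for replica counts; the `reconcile` function and the `web-N` pod names are hypothetical, and a real Deployment does this through an intermediate object called a ReplicaSet.

```python
import itertools

# Hypothetical pod naming: web-1, web-2, ...
_pod_ids = itertools.count(1)


def reconcile(desired_replicas, running_pods):
    """One pass of a desired-state loop.

    Starts new pods if too few are running and stops extras if too many,
    returning the corrected list of pod names.
    """
    pods = list(running_pods)
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_pod_ids)}")
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


pods = reconcile(3, [])
print(pods)                # ['web-1', 'web-2', 'web-3']
pods.remove("web-2")       # simulate a pod crashing
pods = reconcile(3, pods)
print(pods)                # ['web-1', 'web-3', 'web-4']
pods = reconcile(2, pods)  # scale down to 2 replicas
print(pods)                # ['web-1', 'web-3']
```

The key design idea is that you never say "start a pod"; you state how many should exist, and the loop converges on that number regardless of what failed in between.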

How does Kubernetes virtualize networking?

Networking has historically been the last frontier of virtualization. Not that many years ago, we had a whole team at Verizon focused on software-defined networking (SDN) and network function virtualization (NFV) to standardize which network virtualization protocols to use within the company, partnering with startups like Contrail, Nuage, and Nicira. Kubernetes now offers an open-source approach to this problem at scale, built on the following default networking rules.

Within a Pod – Containers in the same pod can communicate with one another via localhost, as if they were on the same machine.

Between Pods – Each pod is assigned an IP address, and each pod can communicate with any other pod (on any node in the cluster) via that IP address.

Outside the Cluster – To accept traffic from outside the cluster, an app needs a defined entry point, such as an ingress, that acts as a service endpoint for the app. This is especially true for internet-facing traffic and web servers. Managing all of these entry points can get tricky, so service meshes such as Istio (which is built on the Envoy proxy) can be bolted on top of Kubernetes to manage more dynamic, software-defined networking environments.
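A toy model of the "between pods" and "outside the cluster" rules. It assumes an example pod range of `10.244.0.0/16` carved into one /24 per node, and a hypothetical `(host, path_prefix, backend)` tuple format for routing rules. In a real cluster, a network plugin handles routing between nodes, and an Ingress is a Kubernetes resource (routing to Services) that an ingress controller implements.

```python
import ipaddress

# Assumed example range for pod IPs; real clusters choose their own.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")


class Node:
    """Hypothetical node that hands out pod IPs from its own slice of the cluster range."""

    def __init__(self, name, subnet):
        self.name = name
        self.subnet = subnet
        self._free_ips = subnet.hosts()  # iterator over usable addresses

    def assign_pod_ip(self):
        return next(self._free_ips)


def route(rules, host, path):
    """Ingress-style routing: match the host, then the longest matching path prefix."""
    best_prefix, best_backend = None, None
    for rule_host, prefix, backend in rules:
        if rule_host != host:
            continue
        matches = path == prefix or path.startswith(prefix.rstrip("/") + "/")
        if matches and (best_prefix is None or len(prefix) > len(best_prefix)):
            best_prefix, best_backend = prefix, backend
    return best_backend


# Carve one /24 per node out of the cluster range.
subnets = CLUSTER_CIDR.subnets(new_prefix=24)
node_a = Node("node-a", next(subnets))
node_b = Node("node-b", next(subnets))

# Between pods: every pod gets a unique IP, so any pod can address any other directly.
pod_ips = {"frontend": node_a.assign_pod_ip(), "backend": node_b.assign_pod_ip()}
for pod, ip in pod_ips.items():
    print(pod, ip)  # frontend 10.244.0.1, then backend 10.244.1.1

# Outside the cluster: external requests are matched against routing rules.
rules = [
    ("shop.example.com", "/", "frontend-svc"),
    ("shop.example.com", "/api", "backend-svc"),
]
print(route(rules, "shop.example.com", "/api/orders"))  # backend-svc
print(route(rules, "shop.example.com", "/cart"))        # frontend-svc
```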

What’s Next?

So far, I’ve provided a brief overview of how Kubernetes approaches virtualization. As a PM, it’s not likely that I’ll be responsible for setting up a Kubernetes cluster (but my customers will), so my next step is to go a layer deeper into the Kubernetes architecture and its components, such as the Master Node and the Worker Nodes. I’ll explore that in a later post.